This chapter covers results in the paper "SmoothGrad: removing noise by adding noise", available at this arXiv link.

The lecture slides are available here.

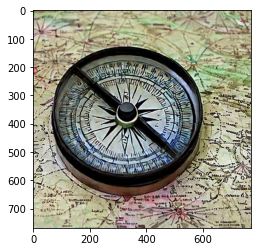

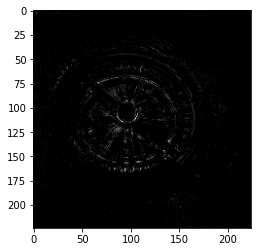

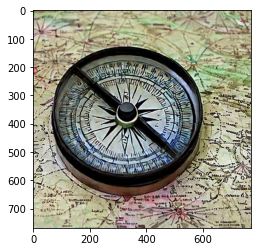

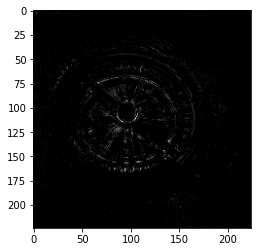

A minimal working example for creating an attribution similar to SmoothGrad for an input given a class label is presented in the Colab document. Here are a few illustrations generated by this minimal example:

| Image | Attribution |
|---|---|

Using any input benchmark of your choice such as ImageNet and any model of your choice such as ResNet101, write a Jupyter notebook to implement the SmoothGrad approach on an attribution method of your choice (20 points). Do not copy code from the minimal working example above.
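As a rough orientation for what the core loop involves, here is a minimal NumPy sketch on a toy model. It is not the Colab example and not a real benchmark setup: the two-layer ReLU scorer, the helper names `make_toy_model` and `score_gradient`, and the default values are all assumptions made for illustration. The plain gradient plays the role of the base attribution method. The noise standard deviation is set as a fraction of the input range, which is how the SmoothGrad paper expresses noise level.

```python
import numpy as np

def make_toy_model(dim, hidden, seed=0):
    """Hypothetical stand-in for a trained network: random two-layer ReLU scorer."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(size=(hidden, dim)) / np.sqrt(dim)
    w2 = rng.normal(size=hidden) / np.sqrt(hidden)
    return W1, w2

def score_gradient(x, W1, w2):
    """Gradient of score(x) = w2 . relu(W1 @ x) with respect to x."""
    active = W1 @ x > 0            # which hidden units are switched on
    return W1.T @ (w2 * active)    # chain rule through the ReLU layer

def smoothgrad(x, grad_fn, noise_level=0.15, n_samples=25, seed=0):
    """Average grad_fn over noisy copies of x (Gaussian noise)."""
    rng = np.random.default_rng(seed)
    sigma = noise_level * (x.max() - x.min())  # std as a fraction of the input range
    total = np.zeros_like(x)
    for _ in range(n_samples):
        noisy = x + rng.normal(0.0, sigma, size=x.shape)
        total += grad_fn(noisy)
    return total / n_samples

W1, w2 = make_toy_model(dim=8, hidden=16)
x = np.linspace(-1.0, 1.0, 8)
attribution = smoothgrad(x, lambda z: score_gradient(z, W1, w2))
print(attribution.shape)  # (8,)
```

In a notebook on a real model, `grad_fn` would be replaced by whichever attribution method you choose, evaluated with your framework's automatic differentiation.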

Using your implementation, vary the strength of the noise added to the input, for example by changing the variance of the normal distribution. Note down your observations about how the noise strength affects the subjective quality of the attributions computed by your implementation. Support your observations using at least 3 different inputs. (20 points)
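One way to build intuition before looking at images is a toy case where the effect of noise strength can be worked out exactly. The sketch below assumes a hypothetical score f(x) = Σ sin(K·x), whose gradient K·cos(K·x) fluctuates rapidly. For Gaussian noise with standard deviation σ, the expected SmoothGrad output in this toy is K·cos(K·x)·exp(−(Kσ)²/2), so larger noise progressively washes the fluctuations out. The constant `K`, the function names, and the sample count are illustrative assumptions.

```python
import numpy as np

K = 20.0  # hypothetical frequency of the toy score f(x) = sum(sin(K * x))

def toy_gradient(x):
    """Gradient of the toy score: d/dx sin(K x) = K cos(K x), elementwise."""
    return K * np.cos(K * x)

def smoothgrad(x, sigma, n_samples, rng):
    """Average the toy gradient over n_samples Gaussian-perturbed copies of x."""
    noise = rng.normal(0.0, sigma, size=(n_samples,) + x.shape)
    return toy_gradient(x + noise).mean(axis=0)

def sweep_noise(x, noise_levels, n_samples=20000, seed=0):
    """Return {noise_level: attribution}, with sigma = level * input range."""
    rng = np.random.default_rng(seed)
    value_range = x.max() - x.min()
    return {level: smoothgrad(x, level * value_range, n_samples, rng)
            for level in noise_levels}

x = np.linspace(0.0, 1.0, 5)
results = sweep_noise(x, [0.0, 0.05, 0.1, 0.2])
for level, attribution in results.items():
    # Largest attribution magnitude shrinks as the noise level grows.
    print(level, np.abs(attribution).max())
```

On a real network there is no such closed form, which is why the assignment asks for visual comparison across several inputs.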

The computed attribution depends on the number of random samples generated from the input by adding the noise. Using 3 different inputs, study how the subjective (visual) quality of the attributions changes as the number of samples is increased from 3 to 50 in your implementation. (20 points)
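Alongside visual inspection, it can help to quantify how much the estimate still moves at a given sample count. The sketch below is one possible way to do that on a toy gradient: it compares SmoothGrad estimates for several sample counts against a reference computed with many more samples. The toy weights, the reference size of 5000, and the error measure (mean absolute difference) are assumptions chosen for illustration.

```python
import numpy as np

_rng_w = np.random.default_rng(0)
W1 = _rng_w.normal(size=(16, 8)) / np.sqrt(8)   # hypothetical toy weights
w2 = _rng_w.normal(size=16) / 4.0

def toy_gradient(x):
    """Gradient of w2 . relu(W1 @ x) with respect to x."""
    return W1.T @ (w2 * (W1 @ x > 0))

def smoothgrad(x, n_samples, sigma, rng):
    """Arithmetic mean of gradients at n_samples Gaussian-perturbed inputs."""
    grads = [toy_gradient(x + rng.normal(0.0, sigma, size=x.shape))
             for _ in range(n_samples)]
    return np.mean(grads, axis=0)

def error_vs_samples(x, sample_counts, sigma=0.3, n_trials=20, seed=0):
    """Mean absolute deviation from a large-sample reference, per sample count."""
    rng = np.random.default_rng(seed)
    reference = smoothgrad(x, 5000, sigma, rng)
    errors = {}
    for n in sample_counts:
        trials = [np.abs(smoothgrad(x, n, sigma, rng) - reference).mean()
                  for _ in range(n_trials)]
        errors[n] = float(np.mean(trials))
    return errors

x = np.linspace(-1.0, 1.0, 8)
for n, err in error_vs_samples(x, [3, 10, 25, 50]).items():
    print(n, err)
```

Because the estimate is an average of independent samples, the deviation is expected to shrink roughly as 1/√n, so gains from additional samples diminish.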

The minimal working example uses a normal distribution as the noise source for creating random samples. Replace the normal distribution with any other noise source of your choice, such as a uniform distribution, and observe the impact on the subjective (visual) quality of the computed attributions. Perform your investigations on at least 3 different inputs. (20 points)
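When swapping noise sources, one practical point is keeping the noise strength comparable, so that differences in the attributions reflect the shape of the distribution and not its scale. The sketch below shows one way to do this by matching standard deviations: a uniform distribution on [−a, a] has variance a²/3, and a Laplace distribution with scale b has variance 2b². The function name `sample_noise`, the choice of families, and the toy gradient are assumptions for illustration.

```python
import numpy as np

def sample_noise(kind, sigma, shape, rng):
    """Draw zero-mean noise with standard deviation sigma from the named family."""
    if kind == "normal":
        return rng.normal(0.0, sigma, size=shape)
    if kind == "uniform":
        half_width = np.sqrt(3.0) * sigma          # Var[U(-a, a)] = a^2 / 3
        return rng.uniform(-half_width, half_width, size=shape)
    if kind == "laplace":
        return rng.laplace(0.0, sigma / np.sqrt(2.0), size=shape)  # Var = 2 b^2
    raise ValueError(f"unknown noise kind: {kind}")

def smoothgrad(x, grad_fn, kind="normal", sigma=0.1, n_samples=25, seed=0):
    """Average grad_fn over inputs perturbed with the chosen noise family."""
    rng = np.random.default_rng(seed)
    grads = [grad_fn(x + sample_noise(kind, sigma, x.shape, rng))
             for _ in range(n_samples)]
    return np.mean(grads, axis=0)

def toy_gradient(x):
    """Hypothetical rapidly varying gradient, standing in for a real model."""
    return np.cos(20.0 * x)

x = np.linspace(0.0, 1.0, 6)
for kind in ("normal", "uniform", "laplace"):
    print(kind, smoothgrad(x, toy_gradient, kind=kind))
```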

The minimal working example computes the arithmetic mean of the attributions computed for each random sample and presents it as the final attribution. Implement another approach, such as the geometric mean, for combining attributions from random samples, and investigate the quality of the computed attributions on at least 3 different inputs. (20 points)
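A detail to keep in mind with the geometric mean is that gradient-based attributions can be negative or zero, where the geometric mean is not defined. The sketch below handles this by taking absolute values and adding a small `eps` before the logarithm. Both choices are assumptions, not something prescribed by the assignment or the paper, and the median is included only as a further example of an alternative aggregator.

```python
import numpy as np

def combine(attributions, method="arithmetic", eps=1e-12):
    """Combine per-sample attributions (stacked along axis 0) into one map."""
    a = np.asarray(attributions, dtype=float)
    if method == "arithmetic":
        return a.mean(axis=0)
    if method == "geometric":
        # Geometric mean of magnitudes, computed in log space for stability.
        return np.exp(np.log(np.abs(a) + eps).mean(axis=0))
    if method == "median":
        return np.median(a, axis=0)
    raise ValueError(f"unknown method: {method}")

samples = np.array([[1.0, 4.0],
                    [4.0, 1.0]])
print(combine(samples, "arithmetic"))  # [2.5 2.5]
print(combine(samples, "geometric"))
print(combine(samples, "median"))      # [2.5 2.5]
```

With this definition, a single near-zero sample at a pixel pulls the geometric mean at that pixel toward zero, so it tends to keep only features that are attributed consistently across samples. The sign information is lost, which may or may not matter for the attribution method you chose.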

These assignments will be evaluated as graduate assignments, and no single correct answer is expected. A variety of answers can equally satisfy the requirements above.

Q1. Should the notebook follow the structure of the example notebook, that is, loading the model, the input, the transformations, and then the attribution analysis?

A1. The code does not have to look like the example notebook. You can arrange the workflow in the code as you deem fit.

Q2. Do we have to use the ImageNet benchmark?

A2. You can use MNIST, CIFAR-10, or any benchmark data set of your choice. You can even use a data set of another modality, such as text, speech, EM signals, etc.